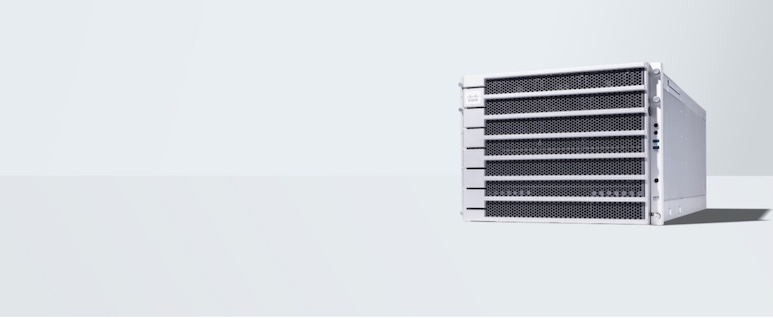

Cisco continues to redefine AI infrastructure with the launch of the UCS C880A M8 Rack Server, now equipped with NVIDIA HGX B300 SXM GPUs and the latest Intel Xeon 6th-Gen (Granite Rapids) CPUs. This marks a convergence of state-of-the-art GPU acceleration, high-throughput CPU compute, and enterprise-grade infrastructure management, optimized for modern AI workloads.

NVIDIA HGX B300: Unprecedented AI Performance

Drawing on NVIDIA’s messaging around the HGX B300 platform:

- Inference performance: Up to 11× higher throughput for models like Llama 3.1 405B versus the previous Hopper generation, thanks to Blackwell Tensor Cores and Transformer Engine optimizations.

- Training speed: Up to 4× faster for large-scale LLMs like Llama 3.1 405B via FP8 precision enhancements, NVLink 5 interconnects (with 1.8 TB/s bandwidth), InfiniBand, and Magnum IO software.

- SXM form factor advantage: High-bandwidth socket architecture enables advanced GPU-to-GPU NVLink connectivity and power delivery without cabling, minimizing bottlenecks and simplifying scaling.
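
To put the figures above in context, here is a rough back-of-the-envelope sketch, not a benchmark. It estimates the weight footprint of a 405-billion-parameter model at two precisions and the idealized time to move those weights over one 1.8 TB/s link. The function names are hypothetical, and the arithmetic assumes 1 byte per parameter for FP8 and 2 bytes for FP16/BF16. It ignores activations, KV cache, optimizer state, and protocol overhead, so real numbers will differ.

```python
def weight_footprint_gb(num_params: float, bytes_per_param: int) -> float:
    """Approximate size of the model weights in GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def ideal_transfer_seconds(size_gb: float, link_tb_per_s: float) -> float:
    """Lower bound on transfer time at the quoted peak link bandwidth."""
    return size_gb / (link_tb_per_s * 1000.0)


LLAMA_405B_PARAMS = 405e9   # parameter count implied by the model name
NVLINK5_TB_PER_S = 1.8      # bandwidth figure quoted in the text above

# Assumed storage cost per parameter for each precision (illustrative).
for label, nbytes in (("FP8", 1), ("FP16/BF16", 2)):
    size = weight_footprint_gb(LLAMA_405B_PARAMS, nbytes)
    secs = ideal_transfer_seconds(size, NVLINK5_TB_PER_S)
    print(f"{label}: ~{size:.0f} GB of weights, >= {secs:.3f} s at peak link rate")
```

Halving the bytes per parameter halves both the footprint and the idealized transfer time, which is one reason lower-precision formats such as FP8 matter for models of this size.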

Intel Xeon 6th-Gen CPUs: CPU Power Meets AI Acceleration

Powered by the newly launched Intel Xeon 6 (6700P/6500P) P-core processors, the UCS C880A M8 delivers:

- Up to 86 cores per socket, doubled memory bandwidth, and built-in accelerators and security features, including Trust Domain Extensions (TDX), Advanced Matrix Extensions (AMX), Data Streaming Accelerator (DSA), QuickAssist Technology (QAT), and In-Memory Analytics Accelerator (IAA).

- Support for DDR5-6400 and MRDIMMs, boosting memory throughput further.

- Optimized for compute-intensive and hybrid AI workloads, from inference pipelines to large-scale training nodes.
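
As a practical aside, one way to confirm that a Linux host exposes AMX is to look for the `amx_tile`, `amx_bf16`, and `amx_int8` feature flags that recent kernels report in `/proc/cpuinfo`. The sketch below is a minimal illustration with a hypothetical helper name. It only checks what the kernel advertises and does not confirm that any particular framework actually uses AMX.

```python
from pathlib import Path

# AMX-related CPU feature flags as reported by recent Linux kernels.
AMX_FLAGS = ("amx_tile", "amx_bf16", "amx_int8")


def detect_amx_flags(cpuinfo_text: str) -> dict[str, bool]:
    """Return which AMX flags appear in /proc/cpuinfo-style text."""
    flags: set[str] = set()
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            # Each logical CPU repeats the flags line; merge them all.
            flags.update(value.split())
    return {name: name in flags for name in AMX_FLAGS}


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    if cpuinfo.exists():
        print(detect_amx_flags(cpuinfo.read_text()))
    else:
        print("No /proc/cpuinfo found; this check is Linux-only.")
```

Taking the text as a parameter rather than reading the file inside the function keeps the check easy to run against saved output from another machine.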

Cisco: Intersight Management + AI POD Integration

Cisco’s AI infrastructure goes beyond raw compute:

- The UCS C880A M8 integrates seamlessly with Cisco Intersight, Cisco’s SaaS-based management platform offering centralized control, visibility, and policy management across the distributed AI stack.

- It fits within Cisco AI PODs, modular AI infrastructure solutions designed for rapid deployment at scale. These validated data center units simplify AI factory rollouts while ensuring interoperability with compute, networking, and security.

Key Use Cases Enabled by HGX B300 (SXM)

The combination of HGX B300 SXM GPUs and Xeon 6th-Gen CPUs enables a range of AI workloads:

- Real-Time LLM Inference
  - Run massive models like Llama 3.1 405B with ultra-low latency and high throughput, ideal for chatbots, agents, and real-time reasoning.

- Large-Scale Model Training & Fine-Tuning
  - Take advantage of up to 4× faster training and massive inter-GPU bandwidth to train or fine-tune models with hundreds of billions of parameters.

- High-Performance AI Pipelines
  - Leverage CPU offload for data preparation and orchestration, alongside GPU acceleration, for ETL, multimodal processing, and inference workflows.

- AI-Native Data Centers / AI Factories
  - Build composable, secure, and scalable AI infrastructure blocks with Cisco AI PODs, ready for integration in data centers or at the edge.

- HPC & Scientific Simulation
  - Run contiguous memory models and multi-GPU workloads with enhanced NVLink connectivity for high-fidelity simulations and analytics.

Summary Table

| Component | Highlight |
| --- | --- |
| GPU | NVIDIA HGX B300 SXM: up to 11× inference throughput, up to 4× training speed, NVLink 5 bandwidth for leading AI acceleration |
| CPU | Intel Xeon 6th-Gen P-core (up to 86 cores), DDR5-6400, built-in AI accelerators |
| Platform | Cisco UCS C880A M8 with Intersight integration: scalable, orchestrated, and enterprise-ready |
| Ecosystem | Cisco AI PODs + Secure AI Factory + strong interconnect (network, security, validation) |
| Use Cases | LLM inference/training, AI pipelines, AI POD deployment, HPC workloads |

Final Thoughts

The Cisco UCS C880A M8 with HGX B300 and Intel Xeon 6th-Gen sets a new benchmark in AI infrastructure. It offers hyperscale-level AI performance, rock-solid CPU support, enterprise-grade manageability through Intersight, and secure deployments through Cisco Secure AI Factory with NVIDIA and the scalable architectures of Cisco AI PODs. Whether you’re building an AI training cluster, LLM inference engine, or composable AI infrastructure, this platform is purpose-built for the next frontier of AI.

Discover the power of next-gen AI infrastructure: read the Cisco UCS C880A M8 Data Sheet

We’d love to hear what you think. Ask a Question, Comment Below, and Stay Connected with #CiscoPartners on social!
